Gemma 3 AI

Google's most advanced open AI model, with a 128K context window, multimodal capabilities, and support for 140+ languages.

Create powerful applications on a single GPU.

Why Choose Gemma 3

Gemma 3 is Google's most advanced open model, built from the same research and technology that powers Gemini 2.0 models.

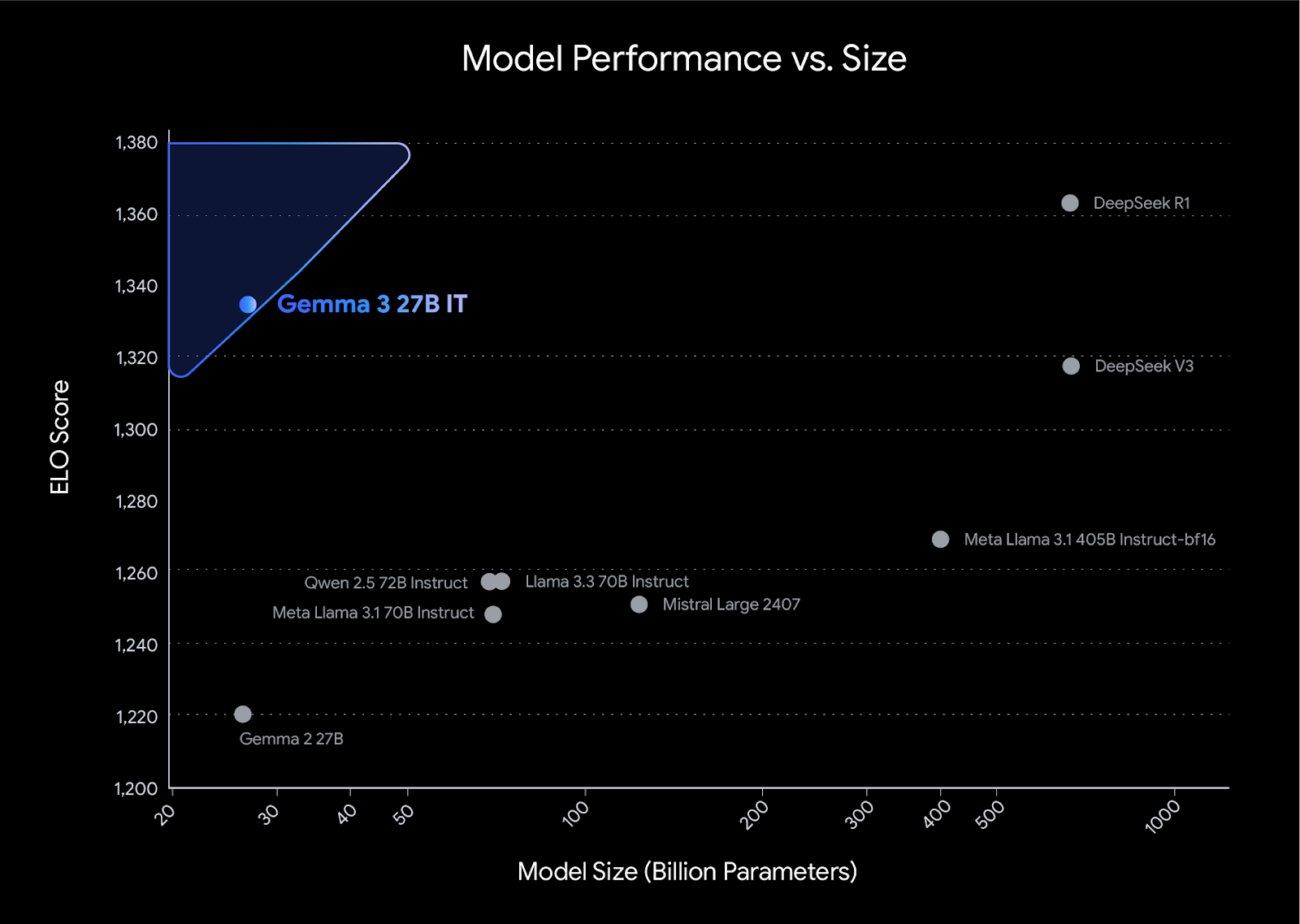

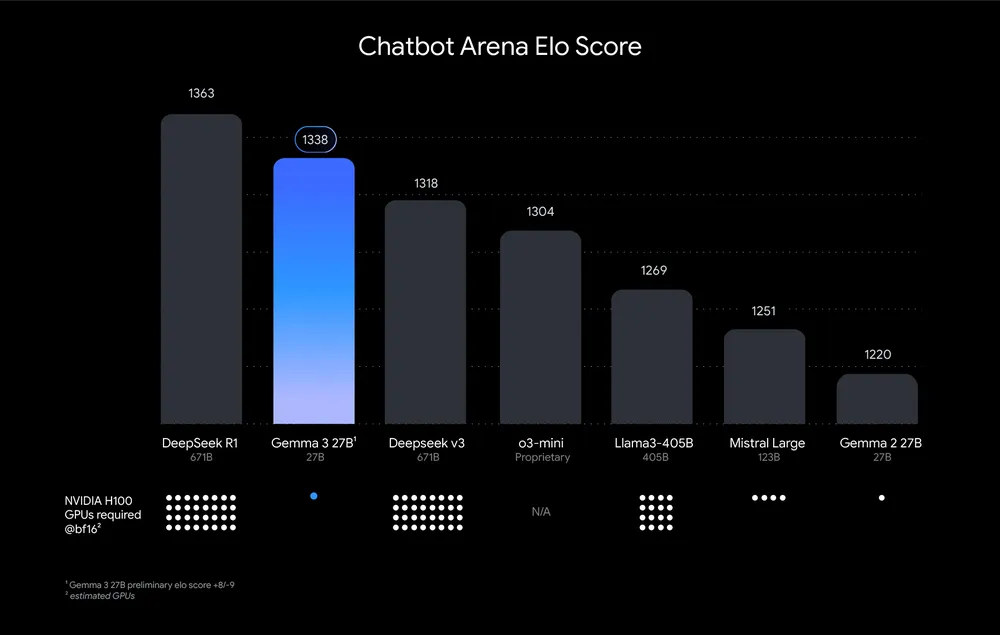

- State-of-the-Art Performance: Outperforms larger models while running on a single GPU or TPU host.

- Multimodal Capabilities: Process text and images together for advanced visual reasoning applications.

- 128K Context Window: Process and understand vast amounts of information for complex tasks.

Gemma 3 Key Benefits

Discover what makes Gemma 3 the ideal choice for your AI development projects

Getting Started with Gemma 3

Build powerful AI applications in four simple steps:

Gemma 3 Advanced Features

Everything you need to build cutting-edge AI applications

Multimodal Processing

Analyze images and text together for comprehensive understanding and reasoning.

128K Token Context

Process documents, conversations, and data with Gemma 3's expanded context window.

140+ Languages

Build globally accessible applications with extensive language support.

Function Calling

Create AI agents that can interact with external tools and APIs.

Quantized Models

Deploy efficiently with optimized models that reduce computational requirements.

Responsible AI

Benefit from Google's comprehensive safety measures and responsible AI practices.

Frequently Asked Questions about Gemma 3

Have another question? Contact us on Discord or by email.

What is Gemma 3?

Gemma 3 is Google's most advanced open AI model, built from the same research and technology that powers Gemini 2.0. It offers state-of-the-art performance in a lightweight package that can run on a single GPU or TPU.

What sizes does Gemma 3 come in?

Gemma 3 is available in four sizes: 1B, 4B, 12B, and 27B parameters, allowing you to choose the best model for your specific hardware and performance needs.

Does Gemma 3 support multiple languages?

Yes, Gemma 3 offers out-of-the-box support for over 35 languages and pretrained support for over 140 languages, making it ideal for building globally accessible applications.

What is the context window size of Gemma 3?

Gemma 3 features a context window of up to 128K tokens (32K for the 1B model), allowing your applications to process long documents, extended conversations, and large inputs in a single request.
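
As a rough illustration of what the context window means in practice, the sketch below estimates whether a text is likely to fit. The 4-characters-per-token ratio is only a common rule of thumb for English text, not a Gemma 3 constant, and the function names and the reserved output budget are hypothetical. For an exact count, use the model's own tokenizer.

```python
# Rough check of whether a text is likely to fit in a 128K-token context window.
# Assumption: ~4 characters per token is a rule of thumb, not a Gemma 3 constant.

CONTEXT_WINDOW_TOKENS = 128_000  # "128K" from the text; the exact limit may differ by deployment
CHARS_PER_TOKEN = 4              # hypothetical heuristic for English text


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 1_024) -> bool:
    """Return True if the estimated prompt size leaves room for the reply."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    document = "word " * 50_000  # 250,000 characters
    print(estimate_tokens(document), fits_in_context(document))
```

Reserving part of the window for the reply matters because prompt and generated tokens share the same budget.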

Does Gemma 3 support multimodal inputs?

Yes, Gemma 3 can process both text and images, enabling applications with advanced visual reasoning capabilities. The 4B, 12B, and 27B models support vision; the 1B model is text-only.

How can I deploy Gemma 3?

Gemma 3 offers multiple deployment options, including Google GenAI API, Vertex AI, Cloud Run, Cloud TPU, and Cloud GPU. It also integrates with popular frameworks like Hugging Face, JAX, PyTorch, and Ollama.
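
For local deployment through Ollama, a request might look like the sketch below. It assumes an Ollama server is running on its default local port and that the `gemma3:4b` model tag has already been pulled. The helper names `build_request` and `generate` are illustrative, not part of any library.

```python
import json
import urllib.request

# Assumptions: Ollama is running locally on its default port and the
# `gemma3:4b` tag has been pulled beforehand.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "gemma3:4b") -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "gemma3:4b") -> str:
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    # Only prints the request body; call generate(...) to contact the server.
    request = build_request("Explain what a context window is in one sentence.")
    print(request.data.decode("utf-8"))
```

Separating request construction from sending keeps the payload easy to inspect before anything goes over the network.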

Is Gemma 3 available for commercial use?

Yes, Gemma 3 is provided with open weights and permits responsible commercial use, allowing you to tune and deploy it in your own projects and applications.

How does Gemma 3 compare to other models?

Gemma 3 delivers state-of-the-art performance for its size, outperforming models such as Llama3-405B, DeepSeek-V3, and o3-mini in preliminary human preference evaluations on LMArena's leaderboard.

Does Gemma 3 support function calling?

Yes, Gemma 3 supports function calling and structured output to help you automate tasks and build agentic experiences.
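
On the application side, function calling usually comes down to parsing the model's structured reply and running the matching tool. The sketch below assumes the prompt instructed the model to answer with a JSON object of the form `{"name": ..., "parameters": {...}}`. That shape, the `get_weather` tool, and the `dispatch` helper are all hypothetical and chosen only for illustration.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool: a stand-in for a real weather API call."""
    return f"Sunny in {city}"


# Registry of tools the application is willing to run.
TOOLS = {"get_weather": get_weather}


def dispatch(model_output: str) -> str:
    """Parse a JSON function call emitted by the model and run the matching tool."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call.get("parameters", {}))


if __name__ == "__main__":
    # Example reply, assuming the prompt asked for this exact JSON shape.
    reply = '{"name": "get_weather", "parameters": {"city": "Paris"}}'
    print(dispatch(reply))
```

Looking up the tool in an explicit registry means the model can only trigger functions the application has chosen to expose.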

Are there quantized versions of Gemma 3 available?

Yes, Gemma 3 introduces official quantized versions, reducing model size and computational requirements while maintaining high accuracy.
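
To get a feel for why quantization reduces hardware requirements, the sketch below estimates weight storage as parameters times bits per parameter. It assumes the nominal parameter counts from the model names and ignores activations, the KV cache, and quantization metadata, so real memory use will be higher. The 16-bit and 4-bit widths are illustrative choices, not a statement about which precisions the official quantized versions use.

```python
# Weight-only memory estimate: parameters x bits per parameter / 8.
# Assumptions: nominal parameter counts, and no overhead for activations,
# KV cache, or quantization metadata.

MODEL_PARAMS_BILLIONS = {"1B": 1, "4B": 4, "12B": 12, "27B": 27}


def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Return approximate weight storage in gigabytes (10^9 bytes)."""
    return params_billions * bits_per_param / 8


if __name__ == "__main__":
    for name, params in MODEL_PARAMS_BILLIONS.items():
        full = weight_memory_gb(params, 16)   # illustrative 16-bit weights
        small = weight_memory_gb(params, 4)   # illustrative 4-bit weights
        print(f"{name}: ~{full:.1f} GB at 16-bit, ~{small:.1f} GB at 4-bit")
```

Under these assumptions the 27B model needs about 54 GB for 16-bit weights and about 13.5 GB at 4 bits.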

Start Building with Gemma 3 Today

Unlock the power of Google's most advanced open AI model